Two weeks ago, I shared the news about my chatbot v2, which uses the LangChain framework with FAISS as a vector store. It was a raw story about how I emerged from coding quicksand after months of iterative work.

While version 2 was a significant improvement over version 1, it still had a lot of issues that I was not happy with. The biggest was performance: it was simply too slow. The front-end interface was also cluttered, with so many introductory sentences that the chat box sat far down the screen.

This post highlights how version 2.10 applies targeted performance optimizations and UX improvements to deliver a smoother, faster chat experience.

Table of Contents

- Transitioning to FastAPI: A Leap in Performance

- Asynchronous Processing: Smoother Conversations

- User Interface Enhancements

- Leveraging Langsmith for performance monitoring and observability

- The full back-end code for version 2.1

- Share this with a friend

Transitioning to FastAPI: A Leap in Performance

The backbone of v2.10’s enhanced performance is the shift from Flask to FastAPI. FastAPI is an ASGI framework with built-in support for async request handlers, so the server can keep serving other requests while one is waiting on a slow call such as an external API request.

v2 Flask framework

    from flask import Flask
    from flask_cors import CORS
    from flask_limiter import Limiter
    from flask_limiter.util import get_remote_address

    # Initialize Flask app and Langchain agent
    app = Flask(__name__)
    CORS(app, resources={r"/query_blog": {"origins": "https://www.chandlernguyen.com"}})
    app.debug = False
    # Rate-limit requests per client IP address
    limiter = Limiter(app=app, key_func=get_remote_address)

Flask served us well, providing simplicity and flexibility. However, as our user base grew, the need for a more performant solution became apparent.

v2.1 FastAPI framework

    from fastapi import FastAPI
    from fastapi.middleware.cors import CORSMiddleware

    # FastAPI with advanced CORS and async support
    # Initialize FastAPI app
    app = FastAPI()

    # Configure CORS for production, allowing only your frontend domain
    origins = ["https://www.chandlernguyen.com"]

    app.add_middleware(
        CORSMiddleware,
        allow_origins=origins,
        # allow_origins=["*"],
        allow_credentials=True,
        allow_methods=["POST"],
        allow_headers=["Content-Type"],
    )

FastAPI enhances performance through asynchronous support: endpoints declared with async def can await slow I/O (such as an API call) without blocking other requests.
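To make that concrete, here is a minimal sketch using only Python's standard asyncio module. It is not taken from the chatbot's code: fake_io_call is a hypothetical stand-in for a slow network request, and the 0.2 second delays are arbitrary. The point is that two waits overlap instead of adding up.

```python
import asyncio
import time


async def fake_io_call(name: str, delay: float) -> str:
    """Hypothetical stand-in for a slow network call (e.g. an HTTP request)."""
    # While this coroutine sleeps, the event loop is free to run other work.
    await asyncio.sleep(delay)
    return f"{name} done"


async def handle_two_requests() -> tuple[list[str], float]:
    """Run two simulated requests concurrently and measure the elapsed time."""
    start = time.perf_counter()
    # gather() schedules both coroutines at once and keeps the result order.
    results = await asyncio.gather(
        fake_io_call("request-1", 0.2),
        fake_io_call("request-2", 0.2),
    )
    elapsed = time.perf_counter() - start
    return list(results), elapsed


if __name__ == "__main__":
    results, elapsed = asyncio.run(handle_two_requests())
    print(results, f"{elapsed:.2f}s")
```

Run one after the other, the two calls would take roughly the sum of their delays. Run concurrently, the total is close to the longest single delay, which is the same effect an async web server relies on when many users are waiting on I/O at once.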

Asynchronous Processing: Smoother Conversations

Leveraging FastAPI’s asynchronous features, v2.1 introduces non-blocking request handling: while one request waits on an external API, the server can work on others, which makes for smoother user interactions. An example is below.

    import logging
    import os

    import httpx
    from fastapi import HTTPException

    # Function to check content with OpenAI Moderation API (sensitive keys are loaded from environment variables)
    async def is_flagged_by_moderation(content: str) -> bool:
        openai_api_key = os.getenv('OPENAI_API_KEY')
        if not openai_api_key:
            logging.error("OPENAI_API_KEY not set in environment variables")
            raise HTTPException(status_code=500, detail="Server configuration error")
        headers = {"Authorization": f"Bearer {openai_api_key}"}
        data = {"input": content}
        async with httpx.AsyncClient() as client:
            response = await client.post("https://api.openai.com/v1/moderations", json=data, headers=headers)
            if response.status_code == 200:
                response_json = response.json()
                return any(result.get("flagged", False) for result in response_json.get("results", []))
            else:
                # On API errors, log the problem and treat the content as not flagged
                logging.error(f"Moderation API error: {response.status_code} - {response.text}")
                return False

User Interface Enhancements

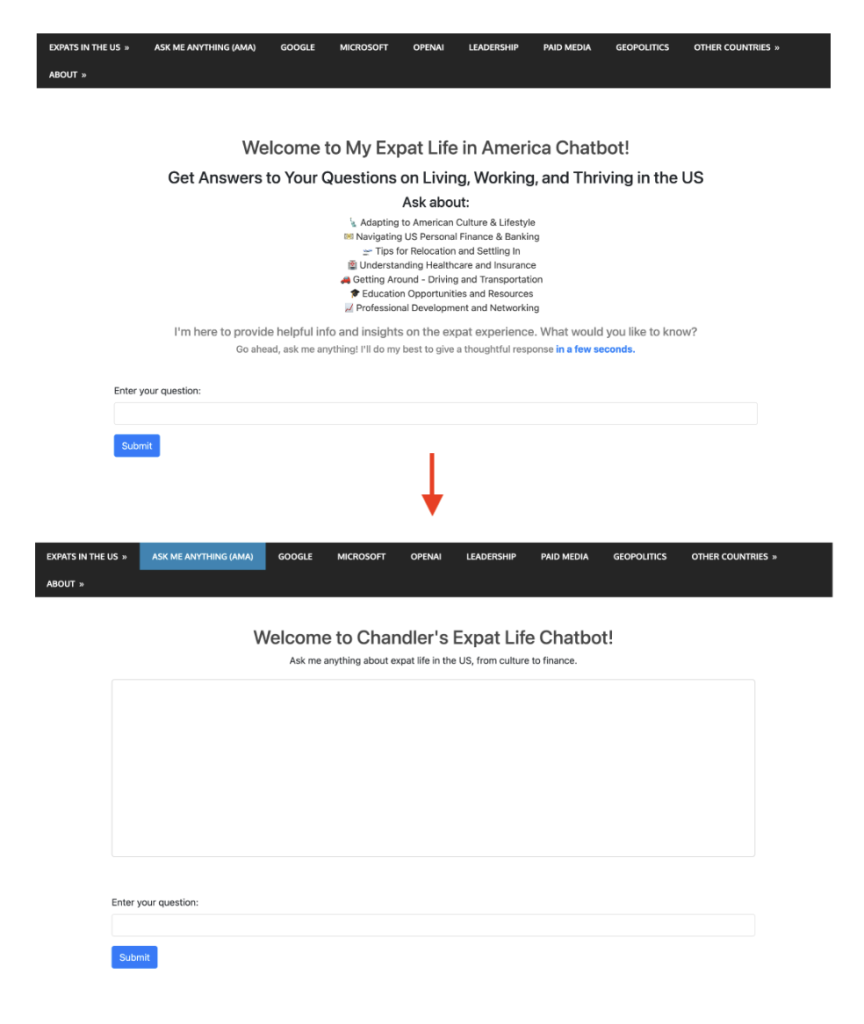

v2.10’s front end has been redesigned to enhance visual appeal and user engagement. With a focus on accessibility and user experience, the new design is simpler and more intuitive.

Reduction of excessive introductory content

This chunk of text is now gone.

    <div class="container mt-5">
        <h2 style="color: #454545; text-align: center;">Welcome to My Expat Life in America Chatbot!</h2>
        <h3 style="margin-top: 15px; text-align: center;">
            Get Answers to Your Questions on Living, Working, and Thriving in the US
        </h3>
        <h4 style="margin-top: 10px; text-align: center;">Ask about:</h4>
        <ul style="list-style: none; text-align: center; color: #333;">
            <li>Adapting to American Culture & Lifestyle</li>
            <li>Navigating US Personal Finance & Banking</li>
            <li>Tips for Relocation and Settling In</li>
            <li>Understanding Healthcare and Insurance</li>
            <li>Getting Around - Driving and Transportation</li>
            <li>Education Opportunities and Resources</li>
            <li>Professional Development and Networking</li>
        </ul>
        <h5 style="margin-top: 10px; color: grey; text-align: center;">
            I'm here to provide helpful info and insights on the expat experience. What would you like to know?
        </h5>
        <h6 style="margin-top: 5px; color: grey; text-align: center;">
            Go ahead, ask me anything! I'll do my best to give a thoughtful response <span style="font-weight: bold; color: #007bff;">in a few seconds.</span>
        </h6>

This is the replacement:

    <h2 style="color: #454545; text-align: center;">Welcome to Chandler's Expat Life Chatbot!</h2>
    <p style="text-align: center; margin-bottom: 20px;">Ask me anything about expat life in the US, from culture to finance.</p>

Visually, this is what the change looks like.

Additional styles to reduce white space and improve the layout

    <style>
    /* Additional styles to reduce white space and improve layout */
    body, html {
        height: 100%;
        margin: 0;
        display: flex;
        flex-direction: column;
    }
    .container {
        flex-grow: 1;
        display: flex;
        flex-direction: column;
        justify-content: center; /* Center vertically in the available space */
    }
    #conversation {
        height: 300px; /* Adjust based on your preference */
        overflow-y: auto;
        border: 1px solid #ccc; /* Add border to conversation box */
        padding: 10px;
        border-radius: 5px; /* Optional: round corners */
    }
    </style>

The #conversation styling is particularly effective: the fixed height keeps the chat box a constant size, and overflow-y: auto makes long conversations scroll inside the box instead of pushing the input field down the page.

    <!-- Area to display the conversation -->
    <div id="conversation" aria-live="polite" class="mb-5" style="height: 300px; overflow-y: auto;"></div>

Adding aria-live="polite" to the conversation div helps screen reader users: new messages are announced as they are added, without interrupting whatever is currently being read.

Auto-focus on Input Field

Calling $('#user-query').focus(); at the end of the AJAX success and error callbacks returns focus to the input field after each message submission, so users can keep typing without clicking back into the box.

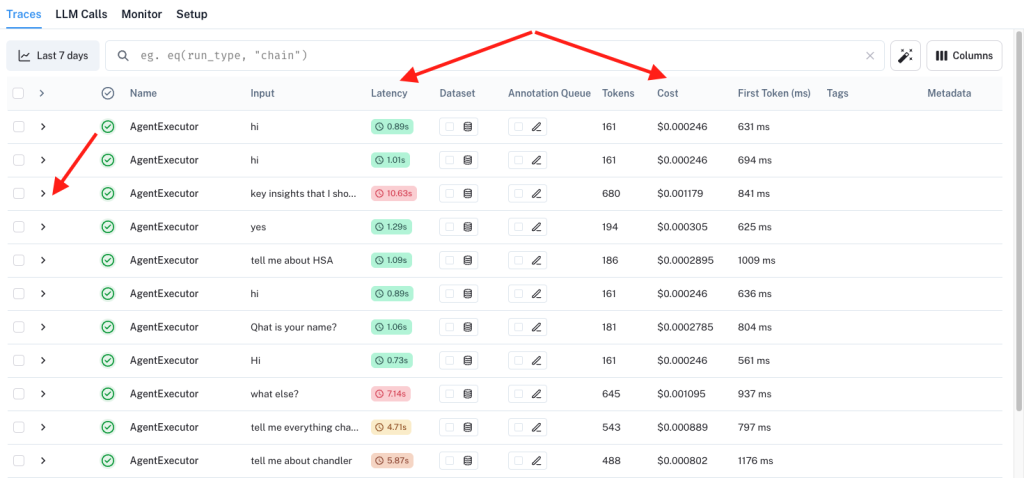

Leveraging Langsmith for performance monitoring and observability

Remember when I wrote about not being able to get TruLens Eval to work? “I had to do this manually because I still couldn’t make trulens eval to work yet. I keep running into an error with an import tru_llama.py.”

With version 2.10, I am using LangSmith. The setup is pretty easy and the dashboard interface is intuitive enough.

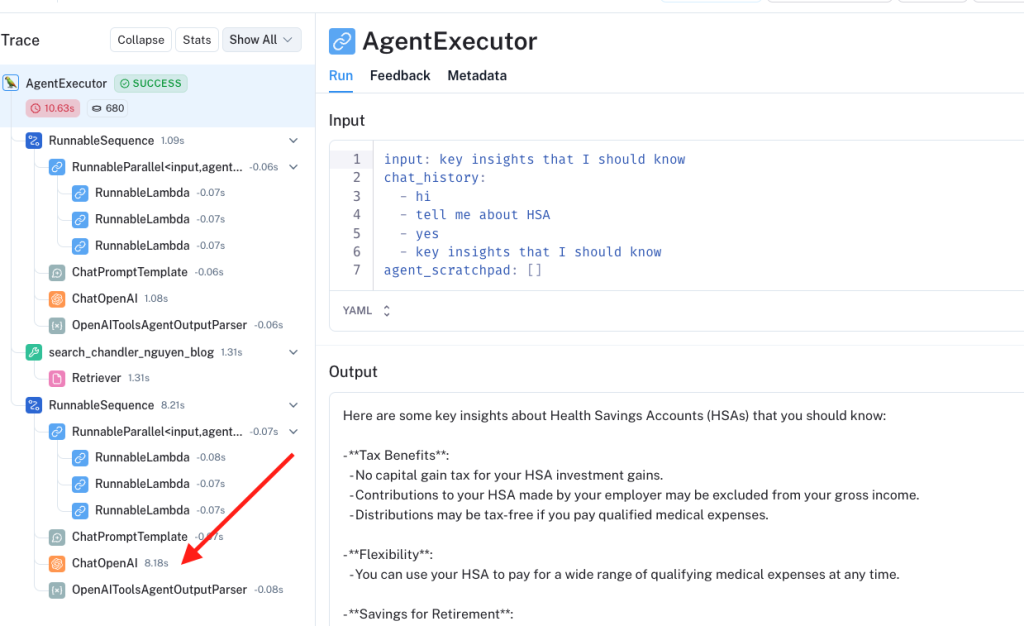

Whenever I notice that a certain query is taking too long, I can click on it and see exactly which step of its journey is causing the delay.
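For context, LangSmith tracing for a LangChain app is typically switched on through environment variables rather than code changes. The sketch below shows one way to do that. It assumes the LANGCHAIN_TRACING_V2, LANGCHAIN_API_KEY, and LANGCHAIN_PROJECT variable names from the LangSmith documentation; the helper functions and the project name are hypothetical and are not taken from the chatbot's repo.

```python
import os


def langsmith_env(project: str, api_key: str) -> dict[str, str]:
    """Build the environment variables that enable LangSmith tracing.

    The variable names are assumed from the LangSmith docs; check the
    current documentation before relying on them.
    """
    return {
        "LANGCHAIN_TRACING_V2": "true",
        "LANGCHAIN_API_KEY": api_key,
        "LANGCHAIN_PROJECT": project,  # groups runs under one dashboard project
    }


def enable_tracing(project: str) -> bool:
    """Enable tracing if an API key is already in the environment.

    Returns False (and changes nothing) when the key is missing, so the
    app can still start without observability.
    """
    api_key = os.getenv("LANGCHAIN_API_KEY")
    if not api_key:
        return False
    os.environ.update(langsmith_env(project, api_key))
    return True


if __name__ == "__main__":
    # "chatbot-v2-1" is a made-up project name for illustration.
    print("tracing enabled:", enable_tracing("chatbot-v2-1"))
```

These variables need to be set before the chain or agent is created, so a call like this would sit near the top of the app's startup code.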

The full back-end code for version 2.1

As usual, I have shared the full back-end code on GitHub here. It is in the same repo as the previous version, chatbot 2.0.

I invite you to take the new and upgraded chatbot for a spin. Ask it anything about what I wrote over the past 16 years. And please continue sharing suggestions so we can make the experience better together. Your insights guide this always-evolving project.

Share this with a friend

If you enjoyed this article and found it valuable, I’d greatly appreciate it if you could share it with your friends or anyone else who might be interested in this topic. Simply send them the link to this post, or share it on your favorite social media platforms. Your support helps me reach more readers and continue providing valuable content.


Chandler