Reactions to "Bing AI Can't Be Trusted"

I fact-checked the fact-checker's claims about Bing AI fabricating financial data—turns out the made-up numbers problem is real and worse than I hoped.

This post was written in 2023. Some details may have changed since then.

I came across the article "Bing AI Can't Be Trusted" today, and naturally it piqued my interest. It is a good article, full of fact checks showing that the new Bing chat presents a lot of made-up information as fact. The post is relatively short, so go ahead and read it.

So a few quick reactions from me:

Surprised and not surprised at the same time

I am generally aware of the limitations of large language models (LLMs), of which ChatGPT is one. The three main ones are:

- It doesn’t index non-text content on the web (video, audio, images, etc.)

- ChatGPT’s training data is old (it ends in 2021)

- These models make things up because they don’t know which information sources are more authoritative or trustworthy than others.

So I was hoping that with the Bing & OpenAI integration, Bing's search engine could solve all of the limitations above. Well, based on Dmitri’s article, it seems Bing hasn’t solved them yet. Not by a long shot. 🙁

Fact-check the article again

It would not be great to learn that what Dmitri wrote was not factually correct either. So I went ahead and did a few fact-checks of my own. I started with Gap's financial statements because they seemed the most straightforward to check. I include the sources and screenshots below so that you don’t have to repeat this exercise:

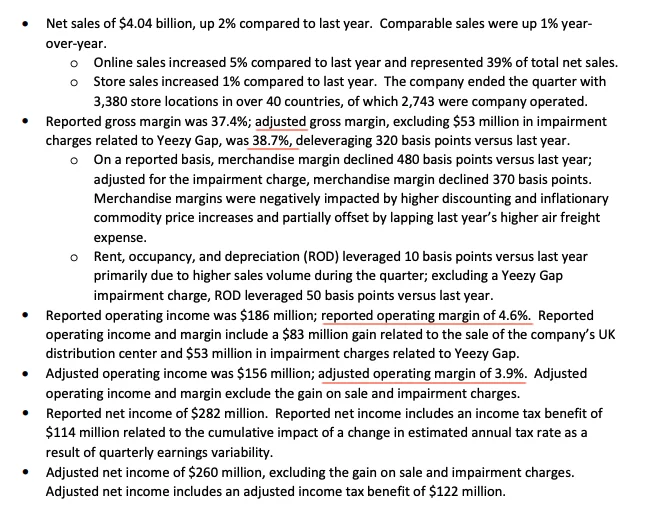

- This is Gap's Q3 2022 earnings release.

- I took the screenshot below from Gap's statement and highlighted the key numbers in red. Dmitri is right: Bing chat made up numbers such as the adjusted gross margin and the operating margin.

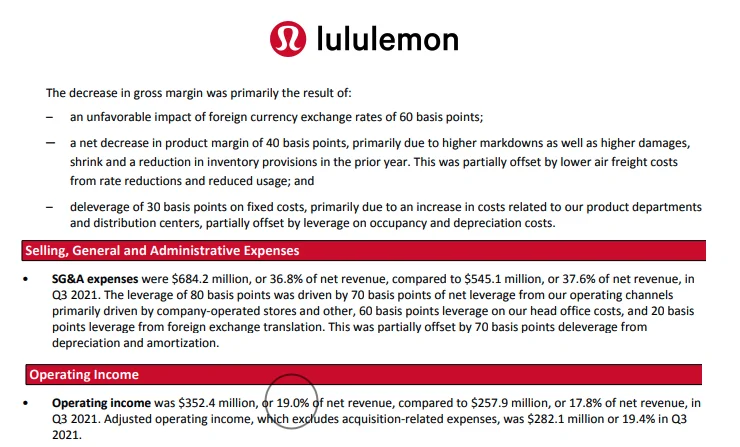

How about Lululemon numbers?

- This is Lululemon's Q3 2022 financial report. Same thing: I highlighted the key numbers mentioned in Dmitri’s article in the screenshots below. He is right, Bing chat made up numbers here too.

As for the Mexico City itinerary, I am not an expert on this topic, so I can’t fact-check it carefully. For example, when I searched for “Primer Nivel Night Club - Antro”, I found this Facebook page. But I have no way of verifying with 100% certainty whether the suggestions from Bing chat are valid or not.

Where can we go from here?

It seems clear that, at this point, the Bing & OpenAI integration hasn’t yet fixed the problem of large language models (LLMs) making things up as they go along.

I am not technical enough to understand how difficult this issue is to solve. But if the output is this inaccurate with factual data that is easy to check, we need to be even more careful with subjective topics like the best restaurants, plumbers, or other local services, personal finance, health, relationships, etc.

Now, to be fair to Bing and OpenAI, they did say during the presentation that they understand the new technology can get many things wrong, so they designed the "thumbs up/thumbs down" interface to make it easy for users to give feedback. Hopefully, with more user feedback, the machine will get better.

An algorithm to fact-check LLM output?

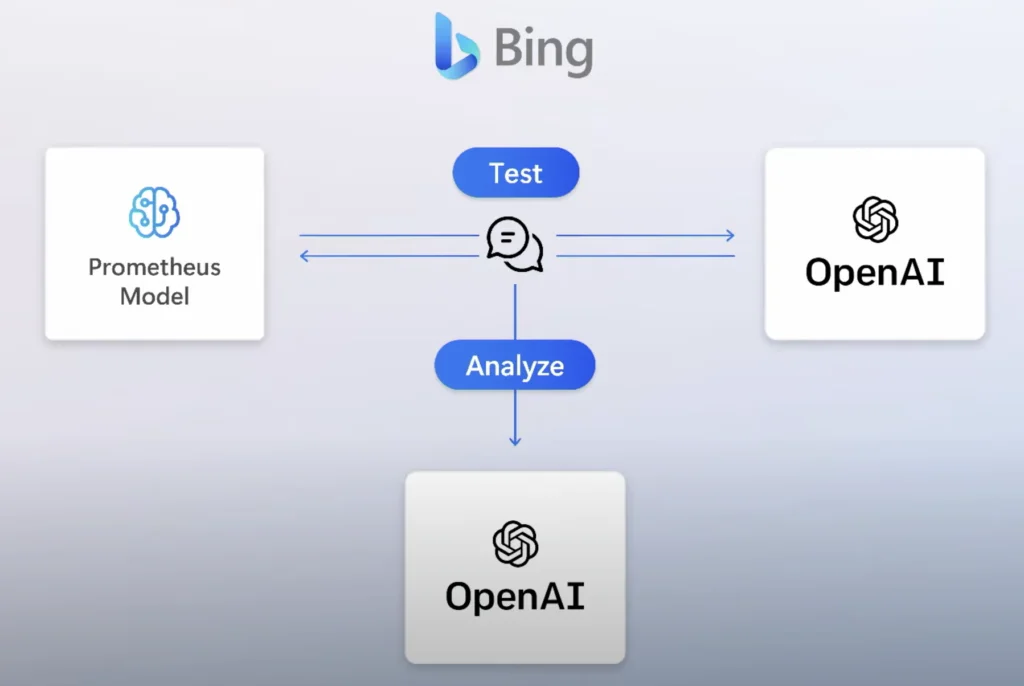

Since LLMs often produce wrong output, how about creating an algorithm to fact-check the output continuously? This would be similar to the safety algorithm that Microsoft said they built into Prometheus, which simulates bad actors' prompts to the machine.
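To make the idea a bit more concrete, here is a minimal sketch of what the simplest version of such a check could look like: pull every percentage out of the machine's answer and see whether the same figure appears in the source document. This is only my own illustration, not how Bing or Prometheus works. The function names (`extract_percentages`, `check_claims`), the tolerance, and the sample sentences are all hypothetical, and the numbers are placeholders, not real figures from anyone's financial report.

```python
import re


def extract_percentages(text):
    """Return every percentage figure in the text as a float, e.g. '37.4%' -> 37.4."""
    return [float(m) for m in re.findall(r"(\d+(?:\.\d+)?)\s*%", text)]


def check_claims(answer, source_text, tolerance=0.05):
    """Pair each percentage in the answer with whether the source contains a close match."""
    source_values = extract_percentages(source_text)
    report = []
    for value in extract_percentages(answer):
        supported = any(abs(value - s) <= tolerance for s in source_values)
        report.append((value, supported))
    return report


# Placeholder sentences for illustration only (NOT real company figures).
source = "Gross margin was 40.0%. Operating margin was 5.0%."
answer = "Gross margin was 40.0% and operating margin was 7.5%."

for value, supported in check_claims(answer, source):
    status = "supported" if supported else "NOT FOUND in source"
    print(f"{value}%: {status}")
```

A check like this only tells you that a number is missing from the source, not what the number refers to, so it would catch invented figures but not a real figure attached to the wrong label. A human still has to look at whatever gets flagged.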

The role of humans

This technology seems to be at an early stage, and while the progress is exponential, the role of the human is critical. We cannot trust the output yet, even with the Bing & OpenAI integration. The machine can get us roughly 50% of the way to the desired outcome, but we need to put in the other 50%.

There seems to be enough time for us to adjust, learn the strengths and limitations of this technology, and use it effectively.

As for the engineers who design these systems, you probably need to do a better job of highlighting to end users the data points and sentences that the machine is unsure about. Our human brains like shortcuts, so I am sure that many of us (myself included) will take the lazy route and accept what the machine says as the truth :P It is hard for us to be on guard 100% of the time.

Have you caught any AI-generated answers that were confidently wrong? I'd love to hear your examples — the more specific, the better.

Cheers,

Chandler