US or China: who is leading in AI research?

Two major analyses reached opposite conclusions about US vs China AI leadership—but they measured completely different things. Here's why both could be right.

Recently I came across two articles with very different headlines and conclusions:

- China trounces U.S. in AI research output and quality. It was written by Kotaro Fukuoka, Shunsuke Tabeta and Akira Okikawa, Nikkei staff writers.

- Must read: the 100 most cited AI papers in 2022 by Zeta Alpha. The article states: "When we look at where these top-cited papers come from (Figure 1), we see that the United States continues to dominate and the difference among the major powers varies only slightly per year."

- Zeta Alpha also concludes that "Earlier reports (link to the Nikkei article) that China may have overtaken the US in AI R&D seem to be highly exaggerated if we look at it from the perspective of citations."

Naturally, this made me curious, because Nikkei is a reputable news organization and Japan is a US military ally. In other words, Nikkei has little incentive to "spin" the truth in favor of China. So I decided to dig deeper.

As it turned out, I didn't need to dig that deep to see that both could be right at the same time, because they used different methodologies to reach their conclusions. Given that Zeta Alpha published its article later and cited the Nikkei article, Zeta Alpha should have highlighted the differences in methodology directly in the article and let readers decide for themselves.

Different methodologies

| Nikkei methodology | Zeta Alpha methodology |
| --- | --- |
| Nikkei worked with Dutch scientific publisher Elsevier to review academic and conference papers on AI, using 800 or so AI-associated keywords to narrow down the papers. | To create the analysis above, we have first collected the most cited papers per year in the Zeta Alpha platform, and then manually checked the first publication date, so that we place papers in the right year. We supplemented this list by mining for highly cited AI papers on Semantic Scholar with its broader coverage and ability to sort by citation count. We then take for each paper the number of citations on Google Scholar as the representative metric and sort the papers by this number to yield the top-100 for a year. |
| Looking at quantity, the number of AI papers exploded from about 25,000 in 2012 to roughly 135,000 in 2021. | The Zeta Alpha article focuses only on the top 100 papers for each year. |

Both Nikkei and Zeta Alpha use citations as an indication of a paper's quality.

But the first big difference is that Nikkei looks at a much larger number of AI papers than Zeta Alpha to draw its conclusion. When Nikkei wrote, "In 2021, China accounted for 7,401 of the most-cited papers, topping the American tally by 70% or so," it was referring to the top 10% of papers by citations. That is a universe of about 13,500 papers (the top 10% of roughly 135,000 AI papers in 2021).

Zeta Alpha's entire analysis, by contrast, covers only the 100 most-cited papers for each year.

So this is not an apples-to-apples comparison at all.
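To make the size gap concrete, here is a quick back-of-the-envelope sketch using only the figures quoted above. The variable names are mine, and the implied US count is my own rough estimate: it assumes "70% or so" means exactly 70%, which Nikkei does not state.

```python
# Figures quoted in the Nikkei article (as cited above).
total_ai_papers_2021 = 135_000   # "roughly 135,000" AI papers in 2021
top_percent = 10                 # Nikkei's "most-cited" pool: the top 10%
china_top_papers = 7_401         # China's count within that pool

# Zeta Alpha's pool: the 100 most-cited papers of the year.
zeta_alpha_pool = 100

# Size of Nikkei's pool, using integer arithmetic to avoid float rounding.
nikkei_pool = total_ai_papers_2021 * top_percent // 100

# How many times larger Nikkei's pool is than Zeta Alpha's.
pool_ratio = nikkei_pool // zeta_alpha_pool

# ASSUMPTION: treat "70% or so" as exactly 70% to back out a rough US count.
# This is an estimate for illustration, not a number reported by Nikkei.
implied_us_top_papers = round(china_top_papers / 1.70)

print(f"Nikkei pool:     {nikkei_pool:,} papers")
print(f"Zeta Alpha pool: {zeta_alpha_pool:,} papers")
print(f"Ratio:           {pool_ratio}x")
print(f"Implied US count in Nikkei pool: about {implied_us_top_papers:,}")
```

In other words, Nikkei's "most-cited" pool is about 135 times larger than Zeta Alpha's, so the two rankings can disagree without either one being wrong.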

Which method is better, between Nikkei and Zeta Alpha?

I don't have a deep background in the AI field, so I can't say with confidence which methodology is better. I do know that they are different.

If you want to answer this question, I think you need to:

- First, define what criteria you are using to evaluate "better."

  - Covering a larger number of papers means that your sample size is much bigger and covers a lot more niche areas within AI.

  - Focusing on the top 100 papers may make sense if we think most of the commercial or strategic value will accrue to the top few papers or their owners over time. But I doubt that Zeta Alpha has done this analysis.

- Second, find a better way to quantify the value or impact of each paper rather than just using citations. I know that citation count is a crude way of evaluating quality, but is it the best we have?

- Third, ask what the relationship is between a country's AI capability and the percentage of its papers among the top 100 or top 1,000 cited papers in a given year.

  - For example, I am sure that some research labs choose not to publish their most cutting-edge research, especially work with military or high commercial value. Why publish it for others to learn from and help competitors close the gap?

- I can go on, but I hope you get my point.

Some questionable conclusions/headlines

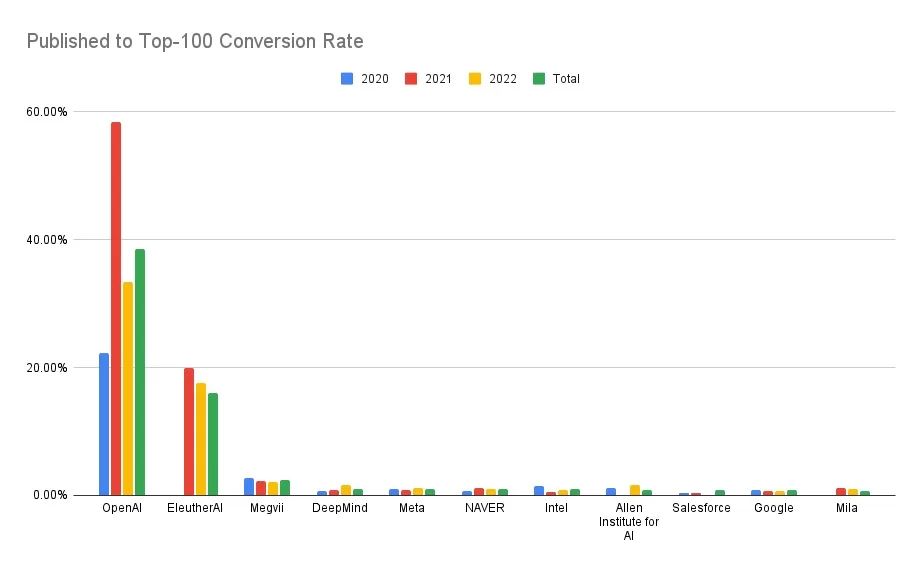

OpenAI is simply in a league of its own when it comes to turning publications into absolute blockbusters

Zeta Alpha wrote that "You won't see OpenAI or DeepMind among the top 20 in the volume of publications. These institutions publish less but with higher impact." and "Now we see that OpenAI is simply in a league of its own when it comes to turning publications into absolute blockbusters."

Why is this "conversion rate" important to look at? What does that mean? One simple way to interpret the data is:

- OpenAI's research focuses on a very narrow field of AI, and they choose to publish a very limited number of papers.

- Google, Meta, and other companies have wide interests in AI and are researching many different areas simultaneously, so they choose to publish more.

- This has nothing to do with OpenAI being good at turning publications into blockbusters.

Which way of working is better? I am not so sure.
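Here is a minimal sketch of why a "conversion rate" can mislead. I am assuming it means top-100 papers divided by total papers published, which is my reading of the Zeta Alpha chart, not their stated formula. The two labs and all of their numbers are made up for illustration and do not come from either article.

```python
from dataclasses import dataclass


@dataclass
class Lab:
    name: str
    papers_published: int   # all AI papers the lab published in the year
    papers_in_top_100: int  # how many of them made the top-100 most-cited list

    @property
    def conversion_rate(self) -> float:
        # Share of the lab's publications that landed in the top 100.
        return self.papers_in_top_100 / self.papers_published


# HYPOTHETICAL numbers, chosen only to illustrate the effect of the denominator.
labs = [
    Lab("Narrow lab", papers_published=50, papers_in_top_100=5),
    Lab("Broad lab", papers_published=2000, papers_in_top_100=20),
]

for lab in labs:
    print(
        f"{lab.name}: {lab.papers_in_top_100} top-100 papers, "
        f"conversion rate {lab.conversion_rate:.1%}"
    )
```

In this made-up example the broad lab has four times as many top-100 papers, yet the narrow lab "wins" on conversion rate simply because it publishes far fewer papers.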

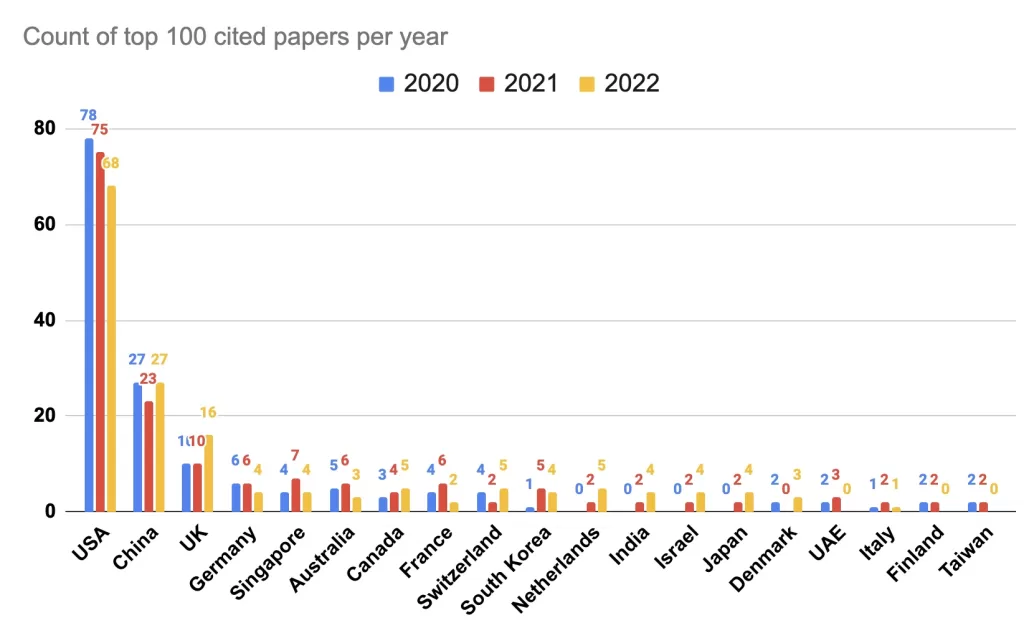

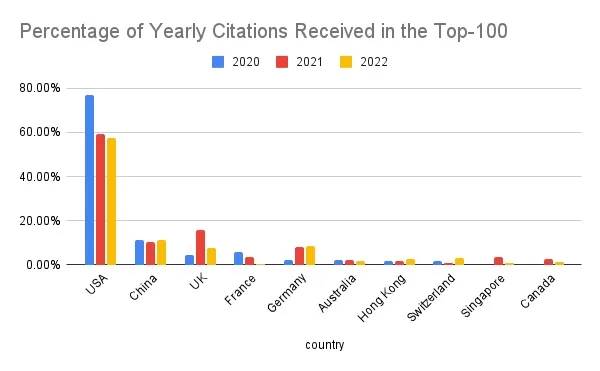

The US dominates AI research papers

This conclusion is based on two main data points below

As mentioned above, I agree that a strong presence among the top 100 (or 1,000, or whatever the number) most-cited AI papers is a signal of a country's strength in AI, but I don't think it should be the ONLY signal. That conclusion should rest on a suite of data points or signals.

Also, why the top 100 and not the top 1,000? Is it because Zeta Alpha's methodology involves manual checking, so they can only cover the top 100?

Conclusion

This is another example of how real-life situations are a lot more nuanced than some headlines indicate. So while I appreciate the effort to simplify the story for the audience, we shouldn't try to "simplify it too much." :)

What do you think is a better way to measure a country's AI capability? Is the number of top-cited papers enough, or should we look at a broader set of signals? I'd love to hear your thoughts.

Cheers,

Chandler