My 4-Week Journey: From Frontend Upgrades to Docker Struggles and Breakthroughs

I rebuilt my site's frontend with AI, upgraded my chatbot's intelligence, and discovered continuous deployment—all because Docker refused to cooperate.

Update (2026): The WordPress frontend and Docker deployment described below are ancient history. The site now runs on Next.js, and Sydney has been rebuilt from scratch with Supabase pgvector and Claude. Still coding, still learning.

Original post from Mar 2024 preserved below for context.

I haven't published any posts over the past 4 weeks. You might be wondering what happened. Did I get lazy and stop learning? Well, sort of: I did go on a trip with my family and some friends over one weekend.

Today's post is going to be a short one. I will share what I have learned over the past 4 weeks. But who cares? Well, it is true that no one will care, so this post is mainly for me to keep track of my progress, and I guess for one more person (whoever you are :D).

TL;DR: "Just in time learning" is real and I am living it.

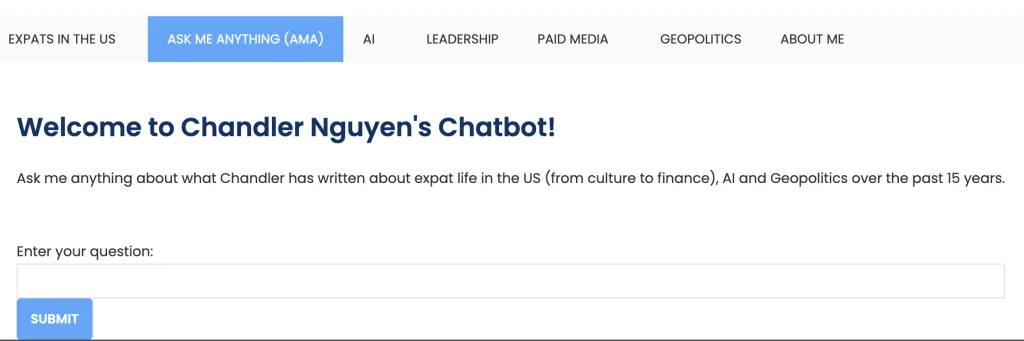

Improved front end

If you visited my site a few months ago, you will notice that it now looks different: more modern and easier to navigate (I hope). I asked GitHub Copilot and ChatGPT-4 to help improve the site's look and feel. The result is more than 300 lines of CSS, most of which I don't know how to write myself. Here's a sneak peek:

```css
/* Defining CSS variables for common values */
:root {
  --main-font: 'Poppins', sans-serif; /* Main font for the website */
  --primary-color: #003366; /* Primary color for text */
  --accent-color: #4da6ff; /* Accent color for links and buttons */
  --background-color: #FAFAFA; /* Background color for the body and some elements */
  --text-color: #333333; /* Main text color */
  --hover-color: #4da6ff; /* Color for hover effects */
}

/* Applying the main font and color to various elements */
body, button, input, select, textarea, h1, h2, h3, h4, h5, h6, .nav-menu, .nav-menu a, .widget-title, .page-numbers.current,
#primary-menu .menu-item a, #primary-menu .sub-menu .menu-item a, .site-title a, .primary-navigation a, .widgettitle, .simpletoc-title {
  font-family: var(--main-font);
  color: var(--text-color);
}
```

Also, when I visit the chatbot page, its look and feel now matches the rest of the site. The box for entering your question sits right at the top, above the fold, and the conversation box grows as the conversation gets longer. I can't believe I didn't do this sooner. Sorry to everyone who visited that page before.

Sydney the chatbot has a new vector store and improved query translation

The entire process, from exporting published content from WordPress through HTML clean-up, chunking, and generating embeddings to storing them in a vector store, is surprisingly easy and fast once you have done it a few times.

I swapped in a new vector store (still FAISS), built with a different chunking approach: this time with a slightly larger chunk size and overlap.
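For anyone curious what the clean-up and chunking steps can look like, here is a minimal sketch using only the Python standard library. It is not the code behind Sydney: the function names `html_to_text` and `chunk_text`, the character-based splitting, and the sizes in the usage note are all assumptions chosen for illustration.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes and ignores the tags around them."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        # Note: this also keeps the contents of <script>/<style> elements,
        # so a real export may need extra filtering.
        self.parts.append(data)


def html_to_text(html: str) -> str:
    """Strip tags and collapse runs of whitespace into single spaces."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())


def chunk_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into chunk_size-character windows sharing `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks
```

Calling `chunk_text(html_to_text(post_html), chunk_size=1000, overlap=200)` would give overlapping pieces ready for embedding. A larger overlap means neighbouring chunks share more context, at the cost of storing more vectors.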

The user's query is transformed using the multi-query retriever from LangChain. I have found the chatbot's answers with this retriever to be more comprehensive and nuanced than with the previous one.

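```python
# Import paths assume a LangChain 0.1.x install with the langchain-openai and
# langchain-community packages; adjust them for your version.
from langchain.retrievers.multi_query import MultiQueryRetriever
from langchain_community.vectorstores import FAISS
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Set up vector store and LLM
embeddings = OpenAIEmbeddings()
# Newer LangChain releases also require allow_dangerous_deserialization=True here.
vectorstore = FAISS.load_local("faiss_index", embeddings)
llm = ChatOpenAI(model_name="gpt-3.5-turbo-0125", temperature=0)

# Set up retriever: the LLM rewrites the query several ways before searching
retriever_from_llm = MultiQueryRetriever.from_llm(
    retriever=vectorstore.as_retriever(), llm=llm
)
```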
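To make the idea behind multi-query retrieval concrete, here is a toy sketch in plain Python. It is not LangChain's implementation: `multi_query_retrieve`, `toy_variants`, `toy_search`, and `TOY_INDEX` are hypothetical stand-ins, with a hard-coded list playing the role of the LLM that rephrases the question and a keyword lookup playing the role of the vector store.

```python
from typing import Callable


def multi_query_retrieve(
    question: str,
    generate_variants: Callable[[str], list[str]],
    search: Callable[[str], list[str]],
) -> list[str]:
    """Search once per query variant and merge the hits, dropping duplicates."""
    seen = set()
    results = []
    for query in generate_variants(question):
        for doc in search(query):
            if doc not in seen:  # keep only the first occurrence of each document
                seen.add(doc)
                results.append(doc)
    return results


# Hypothetical keyword index standing in for the vector store.
TOY_INDEX = {
    "deploy": ["Cloud Run continuous deployment"],
    "docker": ["Pushing Docker images", "Cloud Run continuous deployment"],
}


def toy_variants(question: str) -> list[str]:
    # Stand-in for the LLM call that rephrases the user's question.
    return ["deploy chatbot", "docker chatbot"]


def toy_search(query: str) -> list[str]:
    # Return every document indexed under any word of the query.
    hits = []
    for word in query.split():
        hits.extend(TOY_INDEX.get(word, []))
    return hits


docs = multi_query_retrieve("How do I ship the chatbot?", toy_variants, toy_search)
print(docs)
```

Each rephrased query can surface documents the others miss, and the merge step removes the overlap. That is a plausible reason the answers feel more comprehensive.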

Struggling to push a Docker image to GCP Artifact Registry turned out to be a blessing

For some strange reason, I started having trouble pushing my Docker image to GCP Artifact Registry about a month ago. Everything had worked fine in the months before that. I tried many ways to fix it but couldn't.

That forced me to think of another way to deploy the app, one that doesn't involve pushing the image to Artifact Registry myself. That was how I came across continuous deployment on GCP Cloud Run from a GitHub repo.

Around the same time, I watched an online tutorial that used Poetry. I had no idea what it was, so I asked ChatGPT to explain it to me. It turned out to be a great tool for managing dependencies, so now I am using it :). Because of this exploration, I also learned more about setting up a virtual environment for each project, and I now do that for every single project.

So now, with a fresh virtual environment for each project, Poetry managing dependencies, and continuous deployment on GCP Cloud Run, my workflow is a lot smoother. Deploying an update to the chatbot is also much easier and faster once I have tested it thoroughly locally.

What am I working on now?

Right now, I am working on building and deploying a research assistant using LangChain. The open-source code from LangChain and GPT-Researcher (thank you both!) is clear enough that I can make it work locally. However, deploying it to a production environment turns out to be quite difficult, especially the content-scraping part. Specifically, the CPU usage is way too high when I test the application in a Docker container locally.

I also have a few ideas on how to improve the research assistant approach shared by GPT-Researcher and LangChain, but I need to work on them to bring them to life. I will share more when they are ready.

Your thoughts?

Every line of code, every troubleshooting hour, and every breakthrough has been a stepping stone. And while this journey is mine, I hope it sparks ideas, offers insights, or simply entertains. What challenges have you faced in your tech endeavors? Drop your stories or questions below, and let's navigate these digital waters together.

Cheers,

Chandler

P.S. If you are building a RAG application, I find the "RAG from scratch" series from LangChain super useful.