Google Gemini 2.5 Pro is now my go-to coding partner

After roughly 5,000 hours working with AI models, I find that Gemini 2.5 Pro beats Claude and ChatGPT for coding. Here's why it became my default tool for building complex applications.

It is hard to imagine that ChatGPT was launched only in late 2022. So much has changed since then. As someone who has spent (probably) 5,000 hours working alongside multiple GenAI models over the past 3 years, I can "feel" the step change with Google Gemini 2.5 Pro. It is now my go-to tool for coding, ahead of Claude 3.7 Sonnet (including Claude Code), DeepSeek R1, and OpenAI o1 or o3-mini.

This preference didn't come from a single "eureka" moment, but rather from the cumulative experience of working with different models day after day. The code quality, the long context window, the speed, and the thoughtful UI all add up to make Gemini 2.5 Pro stand out for my particular needs as a developer.

This is my personal "feeling" - no benchmarking was conducted for this post.

Background

Because this post is about my feel for different tools, I think it is important that you understand my background and how I use different GenAI tools. I am a middle-aged advertising professional (yeah, I am past 40, so no matter which definition of young adult you are using, I don't qualify T.T), and I have been learning to code over the past few years. I completed some fundamental courses such as Google IT Automation with Python, Google Cybersecurity Specialization, Machine Learning Specialization, etc.

To apply what I have learnt in real life, I built a RAG agent using LangGraph. It can answer questions about what I have written on this blog over the past ~20 years, as well as financial questions about the Magnificent 7 in the S&P 500. My stack at a high level for this agent is:

- Database: Weaviate (for vector store database and hybrid search), PostgreSQL on Google Cloud

- Agent orchestration: LangGraph

- Deployment: Google Cloud Run on GCP

- Front-end: React

What I am working on

Over the past few months, I have been working on an application that is a bit more complex. I tried to build it using LangGraph, but the performance was just not up to what I expected, mainly around speed and responsiveness. So right now, my overall architecture is:

Backend architecture

- Hybrid Database Approach: I've implemented a hybrid database architecture that combines PostgreSQL (for user data and transactional integrity) with DynamoDB (for scalable state management)

- Serverless Workflow Orchestration: Moving beyond basic agent patterns, I'm using AWS Step Functions to coordinate complex, multi-stage workflows with proper error handling

- Credit-Based System Implementation: Added a credit-based freemium model with proper transaction management

- VPC Configuration: Set up proper network isolation with security groups and VPC endpoints

Front-end improvements

- Modern React Stack: Using Next.js 15 with React 18 and TypeScript for type-safe development

- Authentication System: Integrated AWS Cognito for secure user management

- Polling & State Management: Implemented efficient status tracking with adaptive polling frequencies

- Responsive Design System: Created a minimalist, clean UI with consistent styling patterns
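
"Adaptive polling frequencies" in the list above can be sketched roughly as follows: poll quickly while the status keeps changing, and back off while nothing happens. This is an illustration under assumptions, not the code from this project. The constants `BASE_DELAY_MS` and `MAX_DELAY_MS` and the function names are hypothetical, and doubling the delay is just one common backoff choice.

```typescript
// Assumed values for illustration only.
const BASE_DELAY_MS = 1000;
const MAX_DELAY_MS = 15000;

// Delay before the next status check: doubles with every consecutive
// poll that returned an unchanged status, capped at MAX_DELAY_MS.
function nextPollDelay(unchangedPolls: number): number {
  const delay = BASE_DELAY_MS * Math.pow(2, Math.max(0, unchangedPolls));
  return Math.min(delay, MAX_DELAY_MS);
}

// Simulate a run: given the statuses seen on successive polls,
// return the delay chosen after each one.
function pollSchedule(statuses: string[]): number[] {
  const delays: number[] = [];
  let unchanged = 0;
  for (let i = 0; i < statuses.length; i++) {
    // Reset the backoff whenever the status changes.
    unchanged = i > 0 && statuses[i] === statuses[i - 1] ? unchanged + 1 : 0;
    delays.push(nextPollDelay(unchanged));
  }
  return delays;
}
```

In a real front end, `nextPollDelay` would feed a `setTimeout` between status requests. The simulation helper is only there to make the schedule easy to inspect.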

Why did I choose AWS rather than GCP when I moved away from LangGraph? Simply because I wanted to learn new things. I already have some knowledge of GCP from hosting this website on it and using it for the current agent, so I wanted to learn something completely new.

Why Gemini 2.5 Pro stands out for coding

While others can give you benchmarks, I can tell you why Gemini 2.5 Pro feels better to me.

The actual code is better

Given the same prompt and context, the code from Gemini 2.5 Pro is better than (or at least equal to) the code from DeepSeek R1 or Claude 3.7 Sonnet. I stopped using OpenAI o1 and o3-mini because the quality is just way worse.

What I particularly appreciate about Gemini 2.5 Pro is its willingness to generate complete, ready-to-use code. Both Claude 3.7 Sonnet and DeepSeek R1 can be quite "lazy" at times, offering partial implementations or pseudocode that requires significant modification. For someone like me without deep technical expertise, especially on the backend, this creates an additional challenge. I then need to hunt through my codebase to find the right spots to edit or expand the partial solutions they provide.

Gemini 2.5 Pro, on the other hand, tends to provide fully implemented solutions that I can often copy and paste directly into my project with minimal adjustments. This complete code generation saves me significant time and reduces the cognitive load of having to fill in the gaps myself.

The inference time/speed is better

DeepSeek has a scale problem. Perhaps because too many people are using it, and perhaps because it is not running on the latest Nvidia chips for inference, it is much slower and often shows a "server is busy" error message. Gemini 2.5 Pro, on the other hand, is fast, extremely fast. The web version of Claude 3.7 Sonnet is as fast as Gemini 2.5 Pro, and Claude Code is a bit slower.

Extremely long context window (which means more chat iterations)

Claude 3.7 Sonnet is good, but I often run into the chat depth or context window limit. One way I try to cope is to ask the model to write clear documentation that hands the next task over to another "backend developer" or "front-end developer", and then create a new chat. This becomes tiring very quickly. Also, we all know that we need to debug and can't trust AI-generated code 100% of the time yet, especially when it comes to integration between the backend and the front end. If the context window is too small and you have to start a new chat every time, the machine may not have the full context to identify the bugs.

In contrast, with the 1M-token context window (free tier) from Gemini 2.5 Pro, I can keep iterating: I paste in code and error messages and ask the model again and again within the same chat. This has greatly improved my speed and code quality. :D

(One quick note though: I do notice a significant slowdown in inference time and UI responsiveness once I cross 300k or 400k tokens per prompt.)

Update Apr 4: The UI responsiveness has improved a lot over the past 48 hours. Now, even at 300k tokens, it seems to run smoothly!

Cost considerations

Right now, Gemini 2.5 Pro is FREE to use. Looking back, it feels insane that I used to pay OpenAI $200/month in late 2024 to use their Pro model when the quality was not as good as Gemini 2.5 T.T

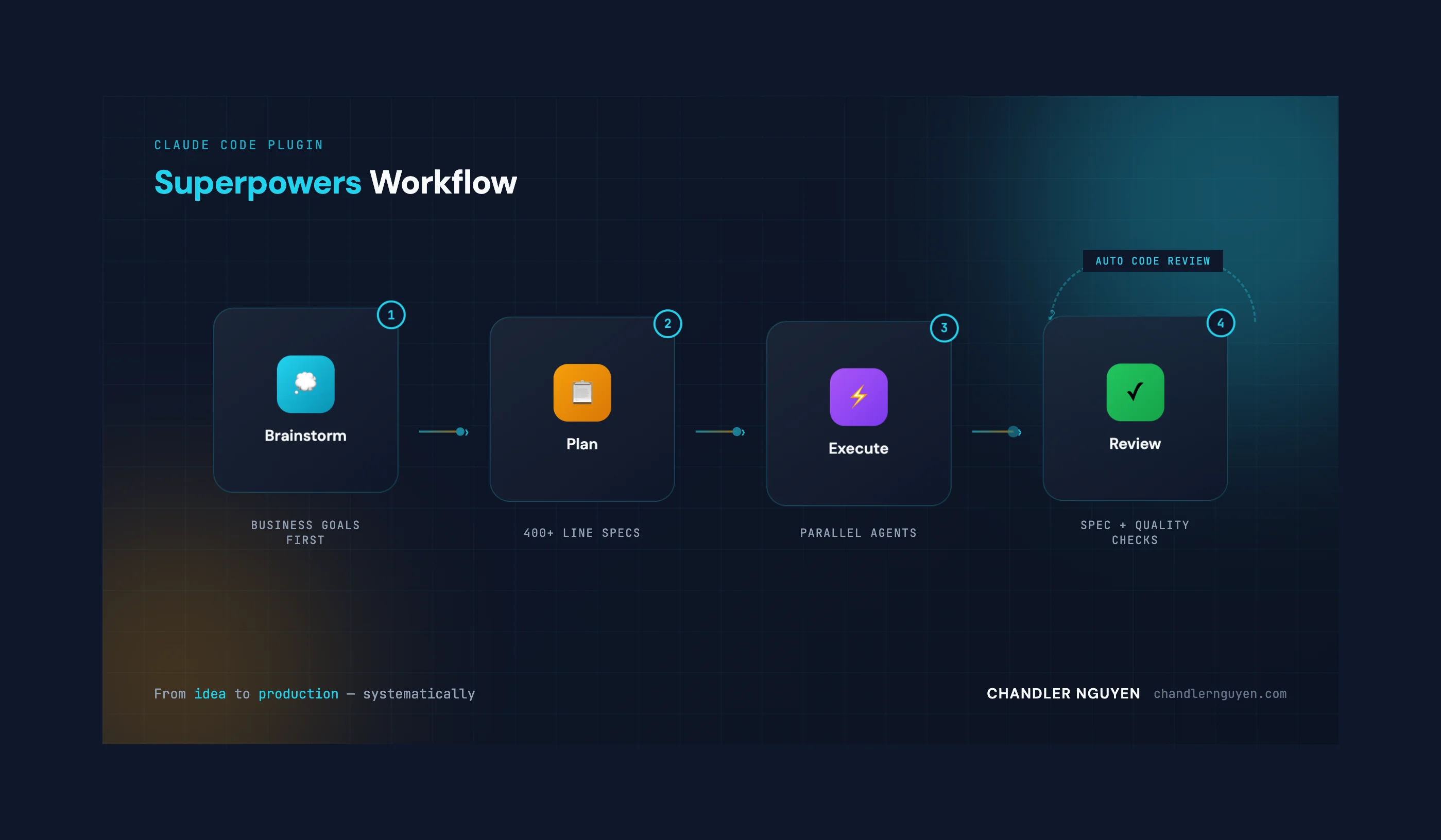

Claude Code is good, but it is very expensive. It is very easy to spend $5 or $10 every hour or so working alongside Claude Code, so it is not feasible for me yet. The cost adds up quickly.

UI advantages of Gemini 2.5 Pro

I am using Gemini 2.5 Pro via Google AI Studio. Compared to the UIs for DeepSeek R1 or Claude 3.7, I appreciate the thought and the attention to detail that went into this one. Here are some examples of what I like:

Token count display

AI Studio shows the token count of the conversation so far. I know that the max is about 1M tokens, but how far am I from it? How much longer can I continue before I have to tell the machine to write documentation summing up what we have done, so that I can continue in a new chat? The counter answers that at a glance.
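
The mental arithmetic behind that question is simple enough to write down. The sketch below takes the token count you read off the UI and reports how much room is left. The 1M limit comes from this post, while the 300k hand-off threshold is only an assumption based on the slowdown noted earlier. The names `contextBudget` and `BudgetReport` are hypothetical and not part of any Google API.

```typescript
// The ~1M-token limit is from this post; the hand-off threshold is an
// assumption based on the slowdown observed past roughly 300k tokens.
const CONTEXT_LIMIT_TOKENS = 1000000;
const HANDOFF_THRESHOLD_TOKENS = 300000;

interface BudgetReport {
  remainingTokens: number;
  percentUsed: number; // rounded to one decimal place
  shouldHandOff: boolean; // time to summarise and start a new chat?
}

function contextBudget(usedTokens: number): BudgetReport {
  const used = Math.max(0, usedTokens);
  return {
    remainingTokens: Math.max(0, CONTEXT_LIMIT_TOKENS - used),
    percentUsed: Math.round((used * 1000) / CONTEXT_LIMIT_TOKENS) / 10,
    shouldHandOff: used >= HANDOFF_THRESHOLD_TOKENS,
  };
}
```

For example, at 350,000 tokens this reports 650,000 tokens remaining and 35% used, and suggests a hand-off because the count is past the assumed threshold.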

Temperature control

It is right there below Token count. Perfect, easy for me to adjust.

Keyboard shortcuts

"Command + Enter" to run the prompt on macOS: great. Now you speak my language, because so many times I accidentally pressed "Enter" when I meant to create a new line so that I could paste more content into the chat. (You can say that it is easy to learn to use Command + Enter for a new line, as in the case of Claude, but well, I am a bit quirky.)

Output length control

Again fantastic, because sometimes I want a short answer and sometimes I want a much longer response, such as the actual code across multiple files.

Copy function

Even the copy function is better. It has "Copy markdown", which is what developers often want to use!

I will stop here, but I guess you get the gist. This UI is very well suited to developers, and I appreciate it. To me, it is much better than ChatGPT, DeepSeek, or even Claude. I really like Claude, but I guess its main drawback is not knowing the token consumption so far versus the limit.

Looking ahead

As I continue building more complex applications, the quality of my AI coding partner becomes increasingly important. While all models will inevitably improve, Gemini 2.5 Pro's combination of code quality, long context window, and thoughtful UI has given it a significant edge for my development workflow.

The real test will be seeing how these models handle even more complex systems as I continue to push my own boundaries. (That test arrived when I built a native iOS app without knowing Swift using Claude Code — the AI handled the scaffold, but the gap between "working code" and "finished product" turned out to be where all the real work lives.)

So there you have it. That is why, in a short period of time, Gemini 2.5 Pro won me over and is now my go-to tool for coding. :D

I'd love to know — what's your go-to AI coding tool right now? And has it changed in the past few months? I feel like the landscape is shifting so fast that what works best today might not be the same answer next quarter. Let me know your thoughts!

Cheers,

Chandler

P.S. I tested GitHub Copilot Agent too, but I don't like it as much because, as of now, the limit per chat is really small and the inference speed is very slow. I hit the Claude 3.7 Sonnet limit there very often, and it doesn't offer Gemini 2.5 Pro yet.